Components

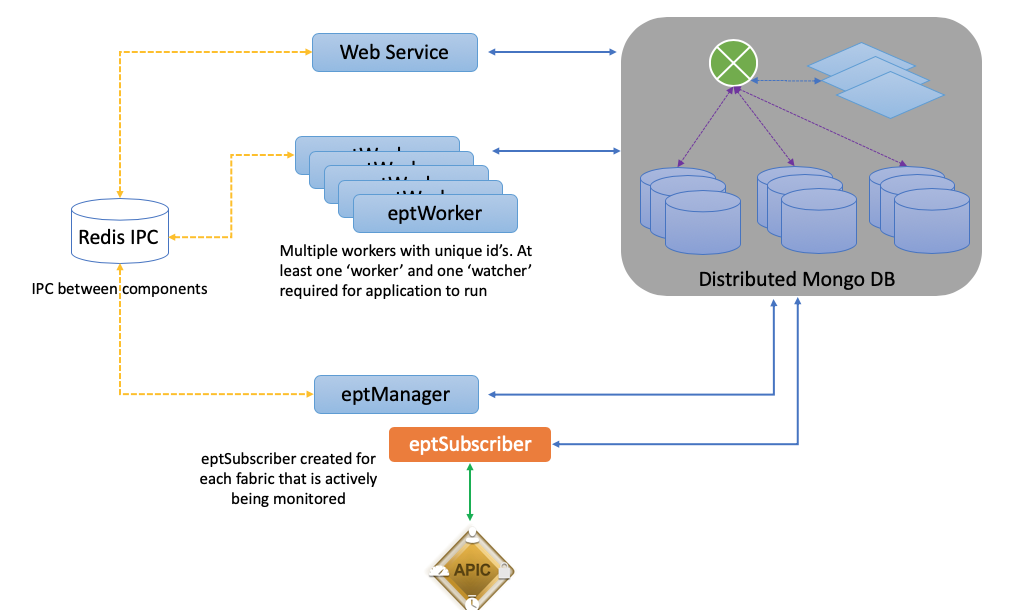

The EnhancedEndpointTracker app is composed of several components that can be deployed either as:

- multiple processes running within the same container in mini or all-in-one mode, or

- separate containers distributed over multiple nodes in full or cluster mode.

mongoDB

mongoDB 3.6 is used for persistent storage of data. In mini mode, this is a single mongo process with journaling disabled and the WiredTiger cache size limited to 1.5 GB of memory. In cluster mode, it runs as a distributed database using mongos, config servers (configsvr) in a replica set, and multiple shards, each configured as a replica set. Sharding is enabled for a subset of collections, generally keyed on endpoint address.
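For reference, the mini-mode storage settings correspond to standard `mongod` options available in mongoDB 3.6: `--nojournal` disables journaling and `--wiredTigerCacheSizeGB` caps the WiredTiger cache. The helper below is a hypothetical sketch that only assembles such a command line. `mini_mode_mongod_args`, the `dbpath`, and the `port` are placeholders, and the app does not necessarily launch mongo this way.

```python
import shlex
from typing import List


def mini_mode_mongod_args(dbpath: str, port: int = 27017, cache_gb: float = 1.5) -> List[str]:
    """Build a mongod command line resembling the mini-mode settings (hypothetical helper)."""
    return [
        "mongod",
        "--dbpath", dbpath,              # placeholder data directory
        "--port", str(port),             # placeholder port
        "--nojournal",                   # journaling disabled, as in mini mode
        "--wiredTigerCacheSizeGB", str(cache_gb),  # cap the WiredTiger cache (GB)
    ]


if __name__ == "__main__":
    print(shlex.join(mini_mode_mongod_args("/data/db")))
```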

redisDB

redisDB is an in-memory key-value store. It is used as a fast inter-process communication (IPC) mechanism between components. There are two main messaging implementations in this app.

eptManager

eptManager is a Python process responsible for starting, stopping, and monitoring eptSubscriber processes, and for tracking the status of all available eptWorker processes. It is also responsible for queuing and distributing all work dispatched to worker processes. Only a single instance of eptManager is deployed within the app.
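The queuing and distribution role described above can be pictured with the toy dispatcher below. It is a sketch under stated assumptions. `Worker`, `Manager`, and the work-item fields `fabric` and `addr` are hypothetical names. The per-worker queues are plain in-process deques rather than redisDB. Choosing a worker by hashing the fabric and endpoint address is an assumed policy that keeps all work for one endpoint on the same worker.

```python
import zlib
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List, Optional


@dataclass
class Worker:
    worker_id: str
    role: str                                   # "worker" or "watcher"
    active: bool = True
    queue: Deque[dict] = field(default_factory=deque)


class Manager:
    """Toy stand-in for eptManager's queue-and-dispatch duty (hypothetical names)."""

    def __init__(self) -> None:
        self.workers: Dict[str, Worker] = {}

    def register(self, worker: Worker) -> None:
        # every eptWorker must have a unique id
        if worker.worker_id in self.workers:
            raise ValueError(f"duplicate worker id {worker.worker_id}")
        self.workers[worker.worker_id] = worker

    def _active(self, role: str) -> List[Worker]:
        # sort by id so the selection is stable for a given set of workers
        return sorted(
            (w for w in self.workers.values() if w.active and w.role == role),
            key=lambda w: w.worker_id,
        )

    def dispatch(self, work: dict, role: str = "worker") -> Optional[Worker]:
        candidates = self._active(role)
        if not candidates:
            return None                         # nothing available for this role
        # assumed policy: deterministic hash on fabric + endpoint address, so all
        # work for one endpoint lands on the same worker and stays in order
        key = f"{work['fabric']}:{work['addr']}".encode()
        target = candidates[zlib.crc32(key) % len(candidates)]
        target.queue.append(work)
        return target
```

Pinning an endpoint to one worker lets its events be analyzed in arrival order without cross-worker coordination. The trade-off is that the modulo mapping shifts whenever the set of active workers changes.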

eptSubscriber

eptSubscriber is a Python process responsible for communication with the APIC. It collects the initial state from the APIC and stores it in the db. It establishes and monitors a websocket to the APIC with subscriptions for all necessary MOs, and ensures the db stays in sync with the APIC. The eptSubscriber process also subscribes to all epm events and dispatches each event to eptManager, which enqueues it to an appropriate eptWorker process for analysis. A single eptSubscriber process runs for each configured fabric. This process is always a subprocess running in the same container as eptManager.

The following objects are collected and monitored by the subscriber process:

- datetimeFormat

- epmIpEp

- epmMacEp

- epmRsMacEpToIpEpAtt

- fabricAutoGEp

- fabricExplicitGEp

- fabricNode

- fabricProtPol

- fvAEPg

- fvBD

- fvCtx

- fvIpAttr

- fvRsBd

- fvSubnet

- fvSvcBD

- l3extExtEncapAllocator

- l3extInstP

- l3extOut

- l3extRsEctx

- mgmtInB

- mgmtRsMgmtBD

- pcAggrIf

- pcRsMbrIfs

- tunnelIf

- vnsEPpInfo

- vnsLIfCtx

- vnsRsEPpInfoToBD

- vnsRsLIfCtxToBD

- vpcRsVpcConf
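The following is a minimal sketch of how the subscriber might split incoming notifications between its two duties. epm objects go to eptManager for analysis, and every other monitored class updates the local db copy. Some details are assumptions based on the general form of the APIC REST API: the notification layout (an `imdata` list of `{className: {"attributes": {...}}}` entries) and the `?subscription=yes` class query. `subscription_urls`, `route_notification`, and the two callbacks are hypothetical names.

```python
from typing import Callable, Dict, List, Tuple

# Classes taken from the list above.
MONITORED_CLASSES = frozenset({
    "datetimeFormat", "epmIpEp", "epmMacEp", "epmRsMacEpToIpEpAtt",
    "fabricAutoGEp", "fabricExplicitGEp", "fabricNode", "fabricProtPol",
    "fvAEPg", "fvBD", "fvCtx", "fvIpAttr", "fvRsBd", "fvSubnet", "fvSvcBD",
    "l3extExtEncapAllocator", "l3extInstP", "l3extOut", "l3extRsEctx",
    "mgmtInB", "mgmtRsMgmtBD", "pcAggrIf", "pcRsMbrIfs", "tunnelIf",
    "vnsEPpInfo", "vnsLIfCtx", "vnsRsEPpInfoToBD", "vnsRsLIfCtxToBD",
    "vpcRsVpcConf",
})
# epm* classes carry endpoint events that are handed to eptManager.
EPM_CLASSES = frozenset(c for c in MONITORED_CLASSES if c.startswith("epm"))

Handler = Callable[[str, Dict], None]


def subscription_urls() -> List[str]:
    # Assumed APIC class-query form with subscription enabled, one per class.
    return [f"/api/class/{c}.json?subscription=yes" for c in sorted(MONITORED_CLASSES)]


def route_notification(notification: Dict, dispatch_epm: Handler,
                       sync_db: Handler) -> Tuple[int, int]:
    """Route each object in one notification; return (epm_events, db_updates)."""
    epm_events = db_updates = 0
    for entry in notification.get("imdata", []):
        for classname, body in entry.items():
            if classname not in MONITORED_CLASSES:
                continue  # ignore classes this sketch does not monitor
            attrs = body.get("attributes", {})
            if classname in EPM_CLASSES:
                dispatch_epm(classname, attrs)  # eptManager enqueues to an eptWorker
                epm_events += 1
            else:
                sync_db(classname, attrs)  # keep the db in sync with the APIC
                db_updates += 1
    return epm_events, db_updates
```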

eptWorker

The number of eptWorker processes is configurable. Each eptWorker must have a unique id and is deployed with a role of either worker or watcher. eptManager requires at least one active eptWorker for each role before it can start any fabric monitors. The eptWorker worker process performs the bulk of the computation for the app. It receives epm events, runs the move, offsubnet, stale, and rapid analyses, and stores the results in the db. If an endpoint is flagged by one of the analyses, a message is sent to eptManager to enqueue to an eptWorker watcher process. The watcher performs the configured notifications and executes rechecks to prevent false detection of transitory events.
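The role rules and the worker-to-watcher hand-off above can be sketched as three small functions. `WorkerStatus`, `ready_to_start_monitors`, `watcher_messages`, and `confirmed_after_rechecks` are hypothetical names. The message fields and the recheck count and interval are illustrative assumptions, not values taken from the app.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable, List

ROLES = ("worker", "watcher")
ANALYSES = ("move", "offsubnet", "stale", "rapid")


@dataclass
class WorkerStatus:
    worker_id: str
    role: str      # "worker" or "watcher"
    active: bool


def ready_to_start_monitors(workers: Iterable[WorkerStatus]) -> bool:
    """True when at least one active eptWorker exists for every role."""
    active_roles = {w.role for w in workers if w.active}
    return all(role in active_roles for role in ROLES)


def watcher_messages(addr: str, flags: dict) -> List[dict]:
    """One message per analysis that flagged the endpoint, destined for a watcher."""
    return [{"role": "watcher", "addr": addr, "analysis": a}
            for a in ANALYSES if flags.get(a)]


def confirmed_after_rechecks(still_flagged: Callable[[], bool],
                             rechecks: int = 2, interval: float = 0.0) -> bool:
    """Notify only if the condition survives every recheck (hypothetical policy)."""
    for _ in range(rechecks):
        time.sleep(interval)
        if not still_flagged():
            return False  # condition cleared: treat it as a transitory event
    return True
```

Requiring both roles before any fabric monitor starts guarantees that a flagged endpoint always has a watcher to receive it. The rechecks trade a short notification delay for fewer false alarms.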

The full source code for the Flask web-service implementation and all ept components is available on GitHub.